What's New:

AMD GPU Support + More

Our internal Ollama provider was bumped to the latest version (0.3.14), which includes support for AMD GPUs as well as other improvements.

On Windows, the additional support files are installed automatically during the installation process. On macOS, there is nothing to do.

Import any Ollama Model Tag or Hugging Face Model

You can now import any Ollama model tag or Hugging Face model into AnythingLLM using the default Ollama provider. Simply enter the tag or URL and hit import. This allows you to use models that are not explicitly listed in the UI.

Just paste in the ollama run command and hit import!

Pulling from Ollama.com

example: ollama run mistral-nemo

Pulling from Hugging Face

example: ollama run hf.co/bartowski/Llama-3.2-1B-Instruct-GGUF

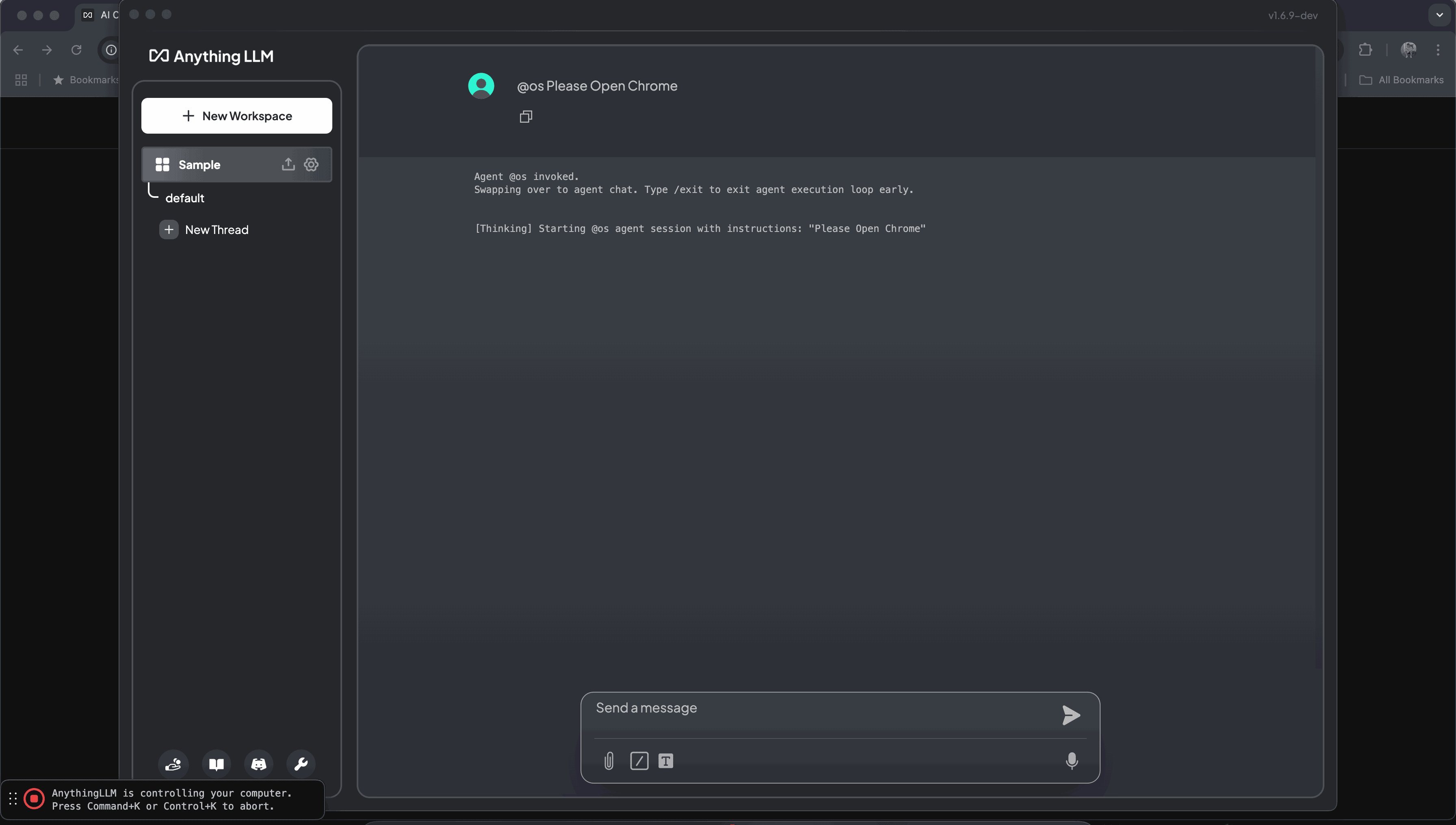
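To make the pasted-command flow concrete, here is a minimal sketch of how a pasted `ollama run` command could be reduced to the model reference that gets imported. It is not AnythingLLM's actual implementation; the function names `parse_model_reference` and `is_hugging_face` are hypothetical and exist only for illustration.

```python
import shlex


def parse_model_reference(pasted: str) -> str:
    """Return the model reference from a pasted `ollama run ...` command or a bare tag.

    Hypothetical helper: illustrates the idea only, not AnythingLLM's real code.
    """
    tokens = shlex.split(pasted.strip())
    # Drop a leading "ollama run" if the full command was pasted.
    if tokens[:2] == ["ollama", "run"]:
        tokens = tokens[2:]
    if not tokens:
        raise ValueError("no model reference found")
    # The first remaining token is the tag or the hf.co/... path.
    return tokens[0]


def is_hugging_face(reference: str) -> bool:
    """The Hugging Face example above uses the hf.co/ prefix."""
    return reference.startswith("hf.co/")


if __name__ == "__main__":
    for cmd in (
        "ollama run mistral-nemo",
        "ollama run hf.co/bartowski/Llama-3.2-1B-Instruct-GGUF",
    ):
        ref = parse_model_reference(cmd)
        print(ref, "(Hugging Face)" if is_hugging_face(ref) else "(Ollama.com)")
```

Accepting either the full command or a bare tag keeps the input forgiving, which matches the "just paste and hit import" description above.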

Computer Use (Anthropic AI)

AnythingLLM can now leverage the new Anthropic AI Computer Use models.

This is an experimental feature and must be explicitly enabled in your system settings.

Find-in-page support for workspace chat

You can now find specific text within the workspace chat window. Simply press Ctrl+F to open the finder input at the top-right of the chat window.

Other Improvements:

- Added NovitaAI as a supported LLM Provider

- Improved document metadata for embedding/RAG results

- Added Session Token support for AWS Bedrock inference

- Updated API docs

- Added API limit/orderBy for the workspace/chats endpoint

- Added support for INO filetype
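As an illustration of the limit/orderBy item in the list above, the sketch below builds a request URL carrying both query parameters. The base URL, the workspace slug, the exact route layout, and the accepted `orderBy` values are assumptions made for this example; check the API docs of your instance for the real route and parameters.

```python
from urllib.parse import quote, urlencode


def build_chats_url(base_url: str, slug: str, limit: int, order_by: str) -> str:
    """Build a workspace chats URL with limit and orderBy query parameters.

    Assumed route layout: <base_url>/workspace/<slug>/chats (hypothetical).
    """
    query = urlencode({"limit": limit, "orderBy": order_by})
    # Percent-encode the slug so unusual characters cannot break the path.
    return f"{base_url.rstrip('/')}/workspace/{quote(slug, safe='')}/chats?{query}"


if __name__ == "__main__":
    # Hypothetical local instance and workspace slug.
    print(build_chats_url("http://localhost:3001/api/v1", "my-workspace", 20, "desc"))
```

Building the query string with `urlencode` rather than string concatenation keeps the parameters correctly escaped if more are added later.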

Bug Fixes:

- Patched restriction that blocked web scraping of localhost addresses

- Patched bad reference for Ephemeral agent invocation

- Fixed issue where files with non-Latin characters were not being respected when uploaded via API

What's Next:

- Community Hub for Agent skills, workspace sharing, and more. Pull Request #2555

- True dark mode and light mode UI. Pull Request #2481