Connecting to Ollama is usually simple, but it can appear not to work depending on whether you are using the AnythingLLM Desktop version or running AnythingLLM via Docker.

In general, every AnythingLLM instance only needs a valid, reachable URL to connect to Ollama, wherever Ollama is running. The nuances described below depend on how you are running AnythingLLM and Ollama.

The most common issue people run into is trying to use localhost or 127.0.0.1 to connect to Ollama running on their local machine when running AnythingLLM via Docker - see the Troubleshooting (Docker) section for how to fix this.

General Troubleshooting (Desktop & Docker)

On both the Desktop and Docker versions of AnythingLLM, the Ollama URL is detected automatically when possible. If it is not detected, you will need to set the Ollama URL manually in the AnythingLLM settings.

The list of automatically detected URLs is as follows:

- http://127.0.0.1:11434
- http://host.docker.internal:11434
- http://172.17.0.1:11434

If your Ollama URL is not in the list above, it will not be detected and you will need to set it manually in the AnythingLLM settings. The URL field is shown in the UI for you to modify.
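To see for yourself which of these candidate URLs is reachable from your environment, a minimal sketch like the following can help. It is not AnythingLLM's actual detection code. The names `CANDIDATE_URLS`, `http_probe`, and `first_reachable` are hypothetical and chosen for illustration, and the sketch assumes that a plain HTTP GET answered with status 200 is enough to count as "detected".

```python
from http.client import HTTPException
from typing import Callable, Iterable, Optional
from urllib.request import urlopen

# Candidate URLs copied from the list in this guide, in the same order.
CANDIDATE_URLS = [
    "http://127.0.0.1:11434",
    "http://host.docker.internal:11434",
    "http://172.17.0.1:11434",
]


def http_probe(url: str, timeout: float = 2.0) -> bool:
    """Return True if an HTTP GET to `url` is answered with status 200.

    Assumption: a 200 response is treated as "reachable"; this is an
    illustrative rule, not AnythingLLM's real detection logic.
    """
    try:
        with urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (OSError, ValueError, HTTPException):
        # Covers refused connections, timeouts, DNS failures, HTTP errors,
        # and malformed URLs.
        return False


def first_reachable(
    urls: Iterable[str] = CANDIDATE_URLS,
    probe: Callable[[str], bool] = http_probe,
) -> Optional[str]:
    """Return the first URL for which `probe` succeeds, or None if none do."""
    for url in urls:
        if probe(url):
            return url
    return None
```

Calling `first_reachable()` from the same environment that runs AnythingLLM (for example, inside the container) returns the first URL that answers, or `None` if you need to set the URL manually. The `probe` parameter exists only so the lookup order can be exercised without a network.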

Ensure Ollama server is Running

Before attempting any fixes or URL changes, verify that Ollama is running properly on your device:

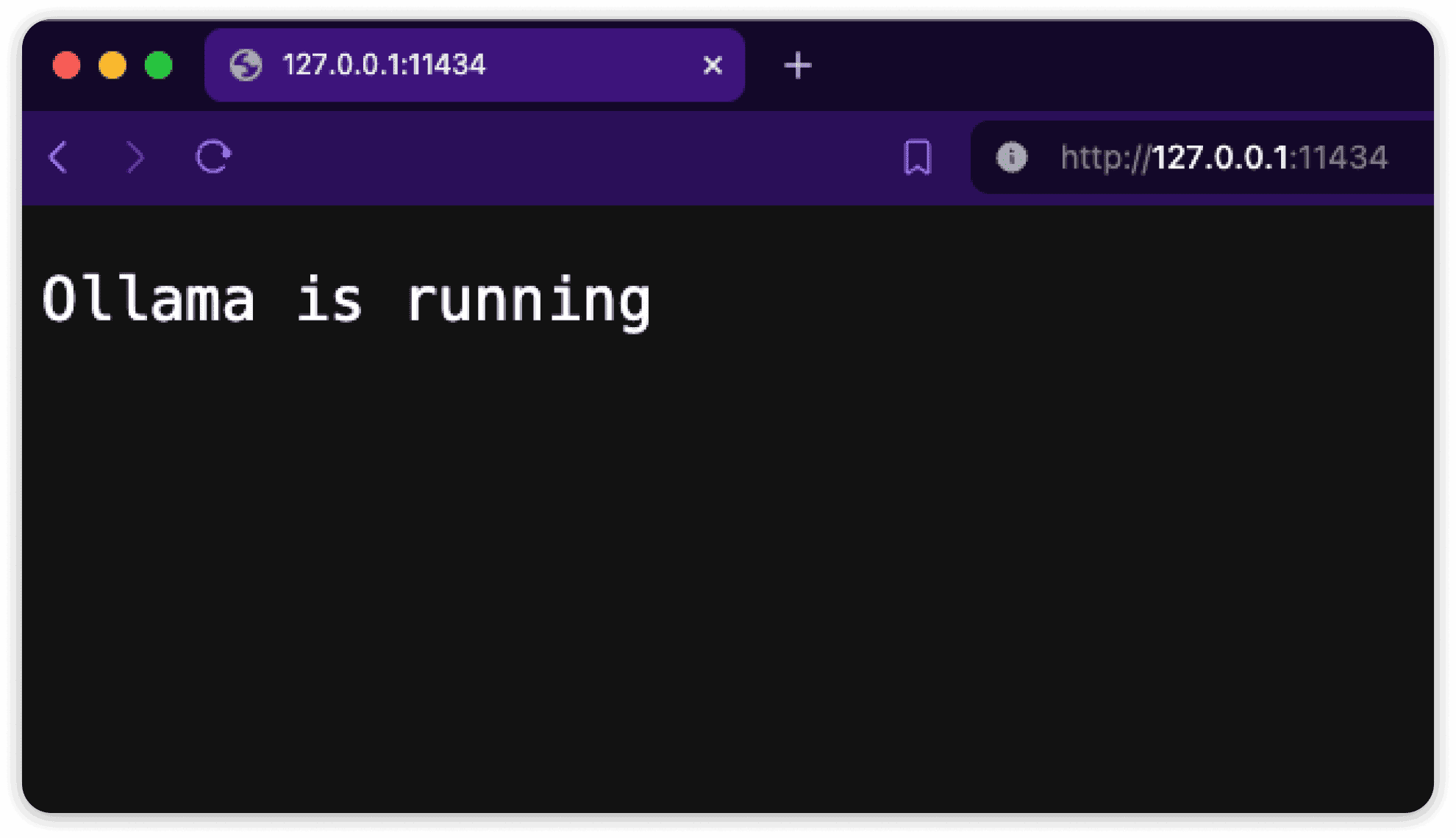

- Open your web browser and navigate to

http://127.0.0.1:11434 - You should see a page similar to this:

If you don't see this page, troubleshoot your Ollama installation and make sure it is running properly before moving forward. If the server has not been started, run the ollama serve command.

Most of the time, the server starts automatically when the Ollama application is running.

Running ollama run model-name will not start the server. That command only runs a model in your command line, and you will not be able to use the Ollama API with it.
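The browser check can also be scripted. The sketch below rests on two assumptions that this guide does not state: that the root URL answers with a short plain-text banner containing "Ollama is running", and that the Ollama API lists installed models at `/api/tags` as JSON with a `models` array whose entries have a `name` field. The function names are hypothetical.

```python
import json
from typing import List
from urllib.request import urlopen


def is_ollama_banner(body: str) -> bool:
    """True if `body` looks like the banner served at the root URL.

    Assumption: the banner contains the text "Ollama is running".
    """
    return "ollama is running" in body.lower()


def model_names(tags_body: str) -> List[str]:
    """Extract model names from the JSON body of GET /api/tags.

    Assumption: the body has the shape {"models": [{"name": ...}, ...]}.
    """
    payload = json.loads(tags_body)
    return [model["name"] for model in payload.get("models", [])]


def check_server(base_url: str = "http://127.0.0.1:11434",
                 timeout: float = 2.0) -> List[str]:
    """Return the installed model names, raising if the server is not usable.

    urlopen raises an OSError subclass when nothing is listening at
    `base_url`, which is the case this troubleshooting step looks for.
    """
    with urlopen(base_url, timeout=timeout) as response:
        banner = response.read().decode("utf-8", errors="replace")
    if not is_ollama_banner(banner):
        raise RuntimeError(f"{base_url} answered, but not with the Ollama banner")
    with urlopen(f"{base_url}/api/tags", timeout=timeout) as response:
        return model_names(response.read().decode("utf-8"))
```

If `check_server()` raises a connection error, the server is not running at that URL. If it returns an empty list, the server is up but no models have been pulled yet.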

Troubleshooting (Docker)

This section applies if you are running AnythingLLM via Docker and are trying to connect to Ollama running locally on your machine.

If you are seeing no models loaded in AnythingLLM or getting error responses from Ollama, this is almost always because you are using the wrong URL for the connection in AnythingLLM.

localhost and 127.0.0.1 do not work on Docker.

Inside a Docker container, localhost and 127.0.0.1 cannot be used for the Ollama connection in AnythingLLM, because both of these refer to the container itself and not the host machine.

To fix this, you can use the host.docker.internal (Windows/MacOS) or 172.17.0.1 (Linux) URLs to connect to the host machine from the Docker container with the same port (default 11434).
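The substitution described above can be sketched as a small helper. This is only an illustration of the rule, not something AnythingLLM exposes: `docker_host_url` and `LOOPBACK_HOSTS` are hypothetical names, and the caller is assumed to know whether Docker is running on Linux.

```python
from urllib.parse import urlsplit, urlunsplit

# Hostnames that point back at the container itself when used inside Docker.
LOOPBACK_HOSTS = {"localhost", "127.0.0.1"}


def docker_host_url(url: str, linux: bool) -> str:
    """Rewrite a loopback Ollama URL so it reaches the host from a container.

    Uses 172.17.0.1 on Linux and host.docker.internal on Windows/MacOS,
    keeping the original scheme, port, and path. URLs that do not point at
    a loopback host are returned unchanged. Credentials embedded in the URL
    are not preserved by this sketch.
    """
    parts = urlsplit(url)
    if parts.hostname not in LOOPBACK_HOSTS:
        return url
    host = "172.17.0.1" if linux else "host.docker.internal"
    netloc = host if parts.port is None else f"{host}:{parts.port}"
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))
```

For example, `docker_host_url("http://localhost:11434", linux=False)` yields `http://host.docker.internal:11434`, while a URL that already points at another machine is left alone.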

Running Docker on Windows or MacOS (available since Docker version 18.03):

http://localhost:11434 => http://host.docker.internal:11434

http://127.0.0.1:11434 => http://host.docker.internal:11434

Running Docker on Linux:

http://localhost:11434 => http://172.17.0.1:11434

http://127.0.0.1:11434 => http://172.17.0.1:11434

Troubleshooting (Remote Ollama)

If you are running AnythingLLM via Docker and are trying to connect to Ollama running on another machine, the underlying principle is the same: the Ollama URL uses the IP address of the machine running Ollama.

In this case, it is your responsibility to ensure that the IP address is correct, that your firewall rules allow the connection, and that the machine is running Ollama. There is no way for AnythingLLM to automatically detect the IP address of a remote machine running Ollama.
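Before entering a remote URL in AnythingLLM, it can help to confirm that the Ollama port is reachable at all from the machine (or container) running AnythingLLM. The following is a minimal sketch using a plain TCP connection. `port_open` is a hypothetical helper name, and 11434 is the default port mentioned earlier in this guide.

```python
import socket


def port_open(host: str, port: int = 11434, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be established.

    A False result usually means a wrong IP address, a firewall blocking
    the port, or no server listening on that port. A True result only
    shows that something accepts connections there, not that it is Ollama.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolvable hostnames.
        return False
```

For example, `port_open("192.168.1.50")` (an illustrative address, not one from this guide) returns `False` if the firewall on that machine blocks port 11434.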

AnythingLLM Cloud + Local Ollama

You cannot connect to Ollama running on your local machine when using AnythingLLM Cloud unless you expose your local machine to the internet long-term via a service like ngrok, which is not recommended and not secure.

While this is technically possible, we do not recommend it. Doing so is at your discretion, provided you understand the security implications of tunneling your local machine to the internet. We will not provide support for any issues related to exposing your local machine to the internet.